Master Data Management in ERP Transformation

Effective ERP systems rely on accurate and consistent master data. It forms the foundation for all transactions, processes, and analytics. Customers, suppliers, materials, cost centres, and organisational structures are just a few examples of master data that underpin how an organisation functions. When this data is flawed or fragmented, it introduces friction at every level. Errors multiply, decisions get distorted, and operational efficiency suffers.

How can you manage master data so that it enables an efficient ERP system and does not put the transformation itself at risk?

Juuli Semi

Juuli is a Business Engineer with a strong background in data-driven process development, particularly within the procurement domain. Before Vuono Group, she worked at Metsä Group on ERP transformation, focusing on testing the system, training users, and improving business processes.

Take Control of the Master Data Early

ERP implementation is an opportunity to clean, standardise, and take control of the master data, but that work cannot happen in the middle of a high-pressure project. Waiting until the design or migration phases introduces risk and rework that can easily derail progress. Instead, it is crucial to integrate data preparation into the overall implementation strategy, with enough lead time to resolve historical inconsistencies and align on a standard structure that reflects how the organisation operates today.

It is the business, not the IT function, that must drive this effort. Master data is not a technical detail; it represents how the organisation sees itself and how it executes its strategies. It affects areas such as pricing, sourcing, reporting, financial control, and compliance. Business units must take ownership of the data relevant to their operations, define how it should be structured and maintained, and commit resources to ensure it is ready for the new system.


The Cost of Neglect: When Data Debt Derails Go-Live

As ERP programmes move into the Execution phase, master data becomes a central determinant of whether timelines hold, testing succeeds, and the solution performs as intended. Any issues that were underestimated or deferred during preparation begin to surface in system build, validation, and cutover planning. If data is incomplete, inconsistent, or misaligned with the new system design, it disrupts progress not through technical faults but through business-level breakdowns.

The impact is immediate. End-to-end testing cannot proceed if sales orders fail due to missing customer records or if materials are incorrectly structured. Financial reconciliation stalls when cost centres are misclassified or unmapped. These are not abstract system issues but real operational barriers caused by data that does not reflect the way the business runs.

One of the most common pitfalls is the belief that data preparation can evolve organically during the Build phase. In practice, legacy data is rarely clean or structured: it typically contains years of accumulated inconsistencies, local variations, and workaround entries. These issues need to be solved before migration, not retrofitted during system testing. Without a proactive and structured approach, master data quickly becomes a bottleneck rather than an enabler.

Differences in how regions or business units define products, customers, or hierarchies often emerge during execution. For example, the same material number may refer to different specifications in different countries, or sales regions may define customer tiers inconsistently. If not harmonised, these discrepancies complicate integration, erode trust in outputs, and can even lead to rework in process design.
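A check for this kind of discrepancy can be quite small. The following is a minimal sketch, assuming legacy records are available as dictionaries with hypothetical `material_no`, `country`, and `spec` fields; the sample records are invented for illustration and do not come from any real system.

```python
from collections import defaultdict


def find_conflicting_materials(records):
    """Return material numbers whose specification differs between countries."""
    specs = defaultdict(dict)  # material_no -> {country: normalised spec}
    for rec in records:
        # Normalise case and whitespace so cosmetic differences are not flagged.
        specs[rec["material_no"]][rec["country"]] = rec["spec"].strip().lower()
    return {
        material_no: by_country
        for material_no, by_country in specs.items()
        if len(set(by_country.values())) > 1
    }


# Invented sample data (hypothetical field names and values).
legacy_records = [
    {"material_no": "M-1001", "country": "FI", "spec": "Steel bolt M8 x 40"},
    {"material_no": "M-1001", "country": "DE", "spec": "Steel bolt M10 x 40"},
    {"material_no": "M-2002", "country": "FI", "spec": "Pallet wrap 500 mm"},
    {"material_no": "M-2002", "country": "SE", "spec": "pallet wrap 500 mm "},
]

conflicts = find_conflicting_materials(legacy_records)
for material_no, by_country in conflicts.items():
    print(material_no, by_country)
```

The script only surfaces candidates. Deciding which specification is the correct one for each material number remains a business decision.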

Real-Life Example

A retailer's ERP implementation faced serious data management challenges: the system required accurate information about thousands of products, including descriptions, pricing, and supplier details. However, rapid store rollouts and an underestimation of complexity led to significant data integrity problems. The company had to manually enter information for 75,000 products, and an investigative team later found that data accuracy was only 30%.

Inaccurate data caused discrepancies in inventory records, pricing errors, and product order issues. Shipments were held up because products did not fit into containers as expected or because tariff codes were missing as a result of poor data entry guidelines. The system's failure to provide accurate inventory data meant stores couldn't respond to customer demand, leading to empty shelves and customer dissatisfaction.

The key lesson: master data quality must be established before system build, not retrofitted during implementation under timeline pressure.

The Roadmap to Successful Master Data Management

To avoid these outcomes, organisations need a deliberate and practical approach to master data management during execution. The first step is to establish clear business ownership for each data domain. Ownership should reside with individuals or teams who understand both the operational relevance of the data and the implications of system structure. This often means assigning data stewards within business functions who are accountable for reviewing, cleaning, and validating data within their domain. They must also have the authority to make decisions and resolve cross-functional conflicts where needed.

Defining quality standards early and aligning them with business needs and system requirements is crucial. These standards need to specify what constitutes complete and valid data for each domain, including mandatory fields, format consistency, naming conventions, and reference data alignment. This provides a baseline against which progress can be measured and testing dependencies assessed.
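One way to make such a standard measurable is to write it down as data and check records against it. The sketch below is illustrative only: the `DomainStandard` class, the field names, and the format patterns are assumptions made for the example, not requirements of any particular ERP system.

```python
import re
from dataclasses import dataclass, field


@dataclass
class DomainStandard:
    """Quality standard for one data domain (hypothetical structure)."""
    mandatory_fields: list
    formats: dict = field(default_factory=dict)  # field name -> regex pattern


def validate(record, standard):
    """Return a list of issues; an empty list means the record meets the standard."""
    issues = []
    for name in standard.mandatory_fields:
        value = record.get(name)
        if value is None or not str(value).strip():
            issues.append(f"missing mandatory field: {name}")
    for name, pattern in standard.formats.items():
        value = record.get(name)
        # Format is only checked when a value is present.
        if value and not re.fullmatch(pattern, str(value)):
            issues.append(f"invalid format: {name}")
    return issues


# Assumed standard for a supplier domain (illustrative rules).
supplier_standard = DomainStandard(
    mandatory_fields=["supplier_id", "name", "country"],
    formats={"supplier_id": r"S\d{6}", "country": r"[A-Z]{2}"},
)

# Invented sample records.
records = [
    {"supplier_id": "S000123", "name": "Example Supplier A", "country": "FI"},
    {"supplier_id": "123", "name": "", "country": "Finland"},
]

report = [validate(r, supplier_standard) for r in records]
share_valid = sum(1 for issues in report if not issues) / len(records)
print(report, share_valid)
```

The share of records with no issues gives each domain a simple baseline figure that can be tracked over time.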

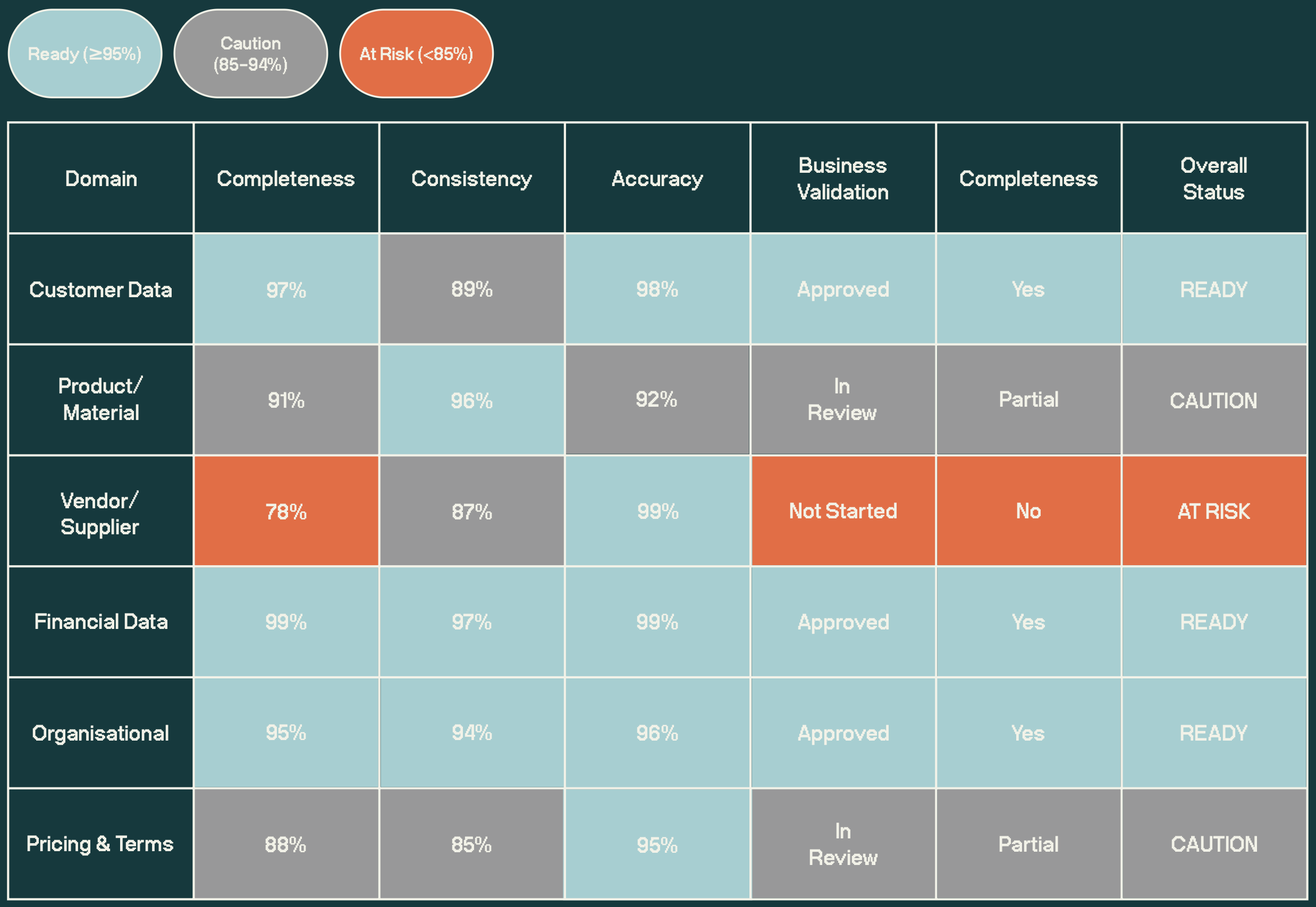

Governance structures must support this ownership. This includes setting up a master data council or similar forum where key stakeholders across functions and geographies can review progress, make decisions, and resolve exceptions. Data quality dashboards, issue logs, and structured review cycles help ensure that issues are identified and addressed in time. Rather than formal checklists, many organisations benefit from lightweight readiness scorecards that track each domain’s progress across key criteria such as completeness, alignment, validation, and approval. These tools provide visibility and accountability without becoming administrative burdens.
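A readiness scorecard of this kind can be kept very simple. The following sketch assumes each domain is scored from 0.0 to 1.0 on the four criteria named above. The thresholds, the weakest-criterion rule, the owner names, and the scores are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

CRITERIA = ("completeness", "alignment", "validation", "approval")


@dataclass
class DomainScorecard:
    domain: str
    owner: str
    scores: dict  # criterion -> share of records meeting it, 0.0 to 1.0

    def readiness(self) -> float:
        # Assumption: a domain is only as ready as its weakest criterion.
        return min(self.scores.get(c, 0.0) for c in CRITERIA)

    def status(self, green: float = 0.95, amber: float = 0.80) -> str:
        # Thresholds are illustrative defaults, not a recommendation.
        value = self.readiness()
        if value >= green:
            return "green"
        return "amber" if value >= amber else "red"


# Invented sample scores for three domains.
scorecards = [
    DomainScorecard("Customers", "Sales lead",
                    {"completeness": 0.98, "alignment": 0.96,
                     "validation": 0.97, "approval": 1.0}),
    DomainScorecard("Cost centres", "Finance lead",
                    {"completeness": 0.90, "alignment": 0.85,
                     "validation": 0.82, "approval": 0.80}),
    DomainScorecard("Materials", "Procurement lead",
                    {"completeness": 0.85, "alignment": 0.90,
                     "validation": 0.60, "approval": 0.0}),
]

summary = {card.domain: card.status() for card in scorecards}
print(summary)
```

Taking the minimum rather than an average keeps a single unapproved or unvalidated domain from being hidden behind otherwise good numbers. An organisation could equally choose weighted scoring.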

Example of the Master Data Readiness Dashboard

Master Data Readiness Checklist

Ownership defined: Each data domain has a named business owner with decision-making authority.

Governance in place: Clear roles, processes, and escalation paths exist for resolving data issues.

Quality standards agreed: Definitions for completeness, consistency, and accuracy are established.

Data cleansed and harmonised: Duplicates, obsolete records, and regional inconsistencies are resolved.

Business validation completed: Critical data sets are reviewed and signed off by business leads.

Readiness tracked: Progress is monitored with dashboards or checklists so that risks are managed actively.

Tech and Human Together

Technology, particularly artificial intelligence, increasingly plays a role in accelerating this work. AI-powered tools can automate much of the initial profiling and cleansing effort, identifying duplicate records, conflicting structures, and anomalies in naming conventions. Machine learning can suggest transformation rules based on historical data, while natural language processing can help classify and standardise unstructured legacy entries. These tools do not replace business oversight but act as enablers that reduce manual work and highlight issues early.
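To give a sense of what automated duplicate profiling involves, here is a deliberately simple sketch. It uses plain string similarity from Python's standard library as a stand-in, not machine learning. The normalisation rules, the list of legal-form suffixes, the 0.9 threshold, and the sample customer names are all assumptions made for the example.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations

# Assumed list of legal-form suffixes to ignore when comparing names.
LEGAL_FORMS = {"ltd", "oy", "inc", "gmbh", "ab"}


def normalise(name):
    """Lower-case, drop punctuation and legal-form suffixes (ASCII-only sketch)."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", name.lower())
    return " ".join(t for t in cleaned.split() if t not in LEGAL_FORMS)


def likely_duplicates(records, threshold=0.9):
    """Return pairs of record ids whose normalised names are very similar."""
    pairs = []
    for (id_a, name_a), (id_b, name_b) in combinations(records.items(), 2):
        ratio = SequenceMatcher(None, normalise(name_a), normalise(name_b)).ratio()
        if ratio >= threshold:
            pairs.append((id_a, id_b))
    return pairs


# Invented sample customer records.
customers = {
    "C001": "Acme Industrial Ltd.",
    "C002": "ACME Industrial Ltd",
    "C003": "Example Timber",
}

candidates = likely_duplicates(customers)
print(candidates)
```

The output is a list of candidate pairs for a data steward to review, which reflects the division of labour described here: the tool narrows the search, and people decide what counts as the same customer.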

That said, tools are only effective when paired with strong governance and engagement. AI cannot make judgment calls about how to define a product or which customer attributes are meaningful; only people close to the business can. Ensuring that those people are involved, empowered, and supported is the real success factor.

Investing in Master Data Management Early Will Pay Off

Effective master data management is not just a technical task; it's safeguarding your most valuable business asset, and managing it with the same rigour as processes, systems, or budgets. Companies that invest in master data early in the ERP journey gain speed, reliability, and clarity later in the programme. Those that neglect it often find themselves fixing the foundations when they should be delivering value.

Ultimately, your ERP system is only as smart as the data it holds. And when you treat your data as a strategic foundation, not an afterthought, you don't just transform your system; you transform your business.
